Embodied AI and Visual Navigation challenge

Published:

A challenge to navigate to a target pose, specified by First Person View (FPV) images in all four directions around the agent, using only the current FPV as input.

Published:

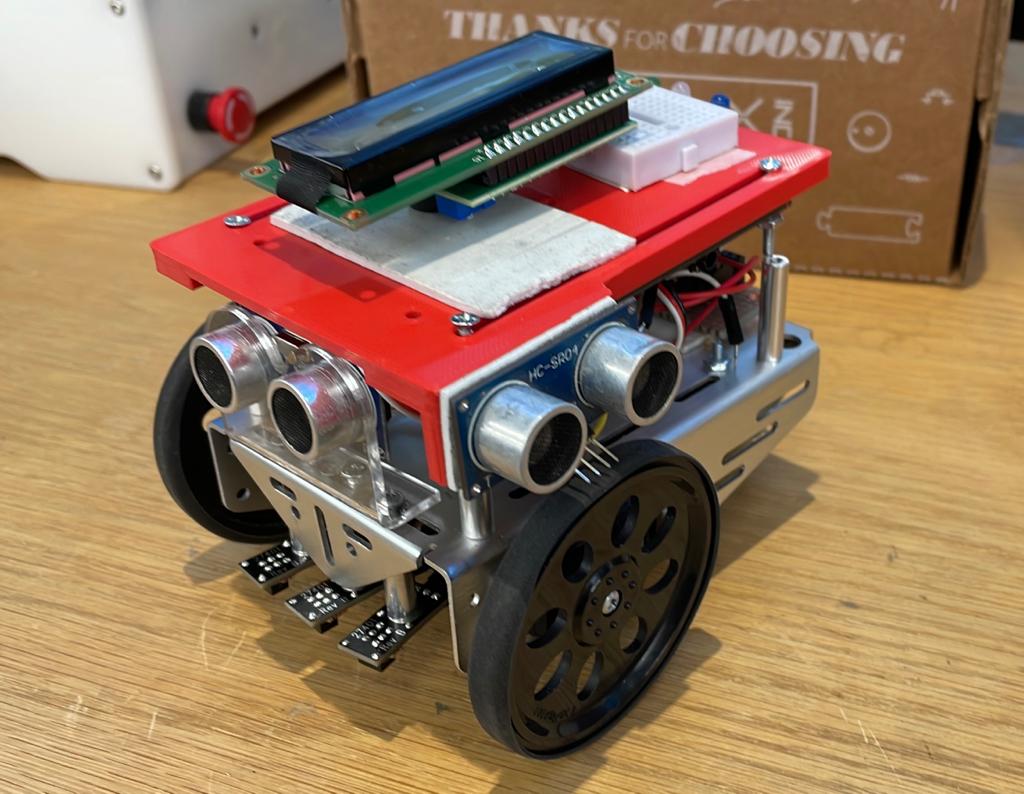

An automated ground vehicle built to explore a predefined shop-floor layout with dynamic obstacles, locate widgets, and navigate to its end position, serving as a proof of concept (POC) for transporting the widget.

Published:

An animatronic hand that mimics hand gestures via remote actuation commands. Built during the NJIT hardware hackathon, Byte into Hardware.

Published:

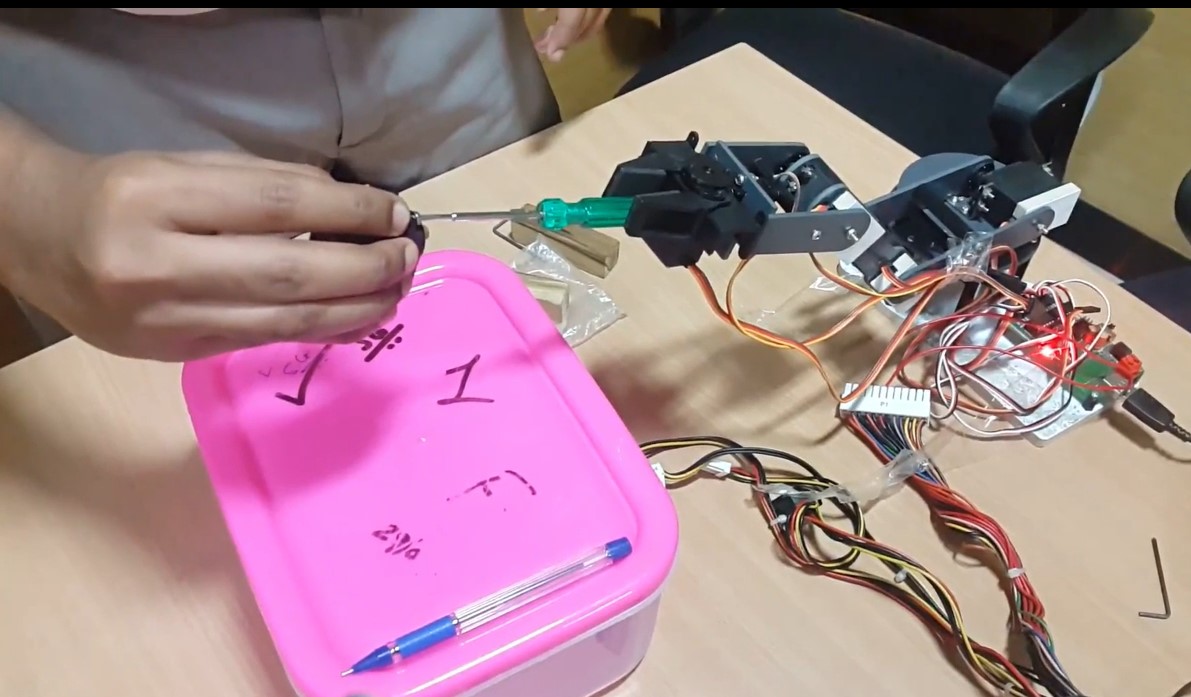

To gain exposure to manipulators during the job training module, we built an open-loop-controlled 6-DOF arm capable of screwing in fasteners.

Published:

A portable corneal topographer to make eye care more accessible in rural areas. The objective was to generate a surface map of the cornea that reveals its surface irregularities.

Published:

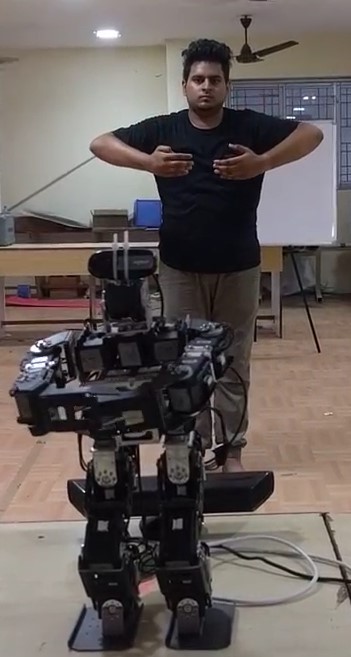

Building on the upper-body teleoperation project, we tracked the operator's whole body and replicated the joint angles on the robot so that it shadows the operator.
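One common way to obtain a joint angle from tracked body keypoints is to take the angle between the two limb segments that meet at the joint. The sketch below illustrates that idea only; the function name `joint_angle` and the 3D-tuple keypoint format are assumptions for illustration, not the project's actual code or tracking pipeline.

```python
import math

def joint_angle(a, b, c):
    """Return the angle at point b (in degrees) formed by segments b->a and b->c.

    a, b, c are hypothetical 3D keypoints, e.g. shoulder, elbow, wrist.
    """
    ba = [ai - bi for ai, bi in zip(a, b)]
    bc = [ci - bi for ci, bi in zip(c, b)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0:
        raise ValueError("keypoints must not coincide with the joint")
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

# Example: an elbow bent at a right angle.
elbow_deg = joint_angle((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
```

The resulting angle could then be sent to the matching robot joint as a position target, subject to that joint's limits.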

Published:

Replica of Robotis OP3.

Published:

A humanoid chatbot capable of basic voice- and text-based interaction.

Published:

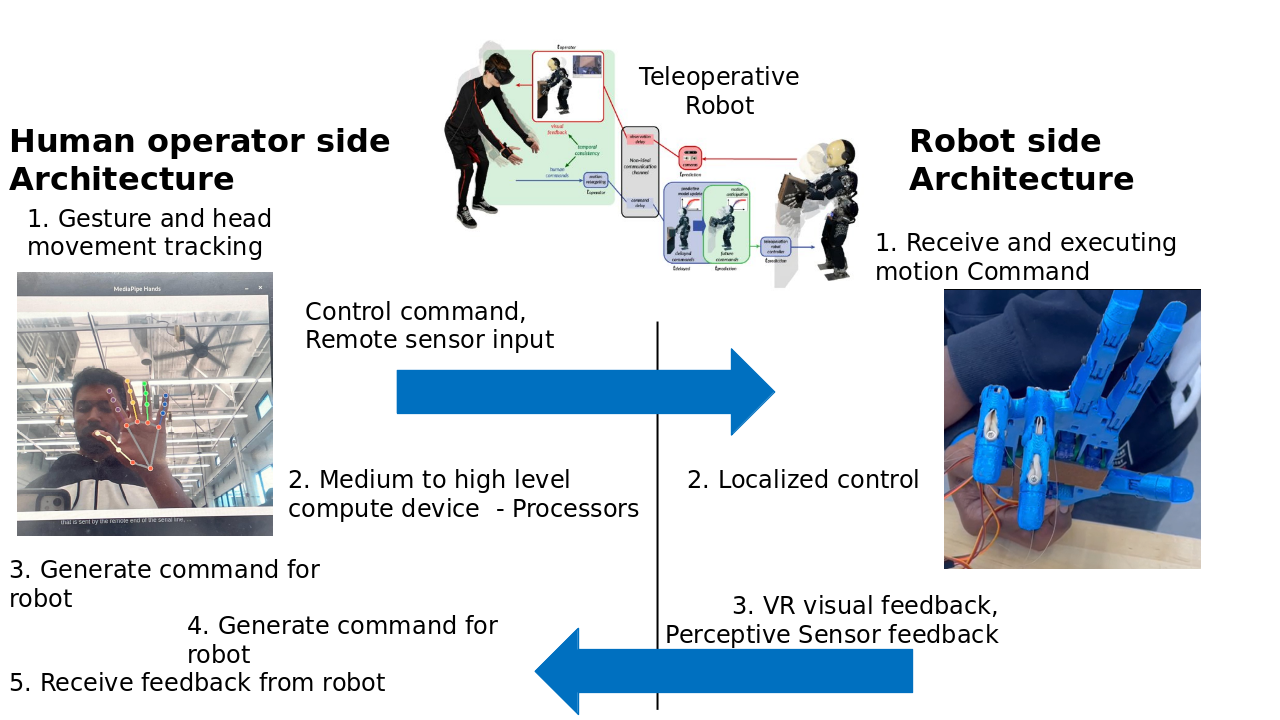

The project aimed to build a robotic human upper body that can be operated remotely. The operator's movements are captured and fed to the robot as input, and the robot's visual field is fed back to the operator via VR.

Published:

The project was an attempt at an assistive technology for people paralyzed from the neck down. The direction of the user's gaze commands the directional movement of a manipulator, which in turn manipulates the object of interest.

Published:

The goal of the project was to track an object of a specific color and shoot at it when it reaches a specific threshold.
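As a rough illustration of color-based tracking with a firing threshold, the sketch below finds the centroid of all pixels inside an RGB range and triggers when that centroid is close enough to an aim point. Everything here is an assumption for illustration: the names `track_color` and `should_fire`, the plain nested-list frame format, and the reading of "threshold" as a maximum pixel offset from the aim point. The original project may have used a different criterion and a vision library.

```python
def track_color(frame, lower, upper):
    """Return the (x, y) centroid of pixels whose RGB values fall within
    [lower, upper] per channel, or None if no pixel matches.

    frame is a hypothetical list of rows, each row a list of (r, g, b) tuples.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if all(lo <= ch <= hi for ch, lo, hi in zip(pixel, lower, upper)):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)

def should_fire(centroid, aim_point, max_offset):
    """Fire only when the target is seen and lies within max_offset pixels
    of the aim point (assumed meaning of the threshold)."""
    if centroid is None:
        return False
    dx = centroid[0] - aim_point[0]
    dy = centroid[1] - aim_point[1]
    return dx * dx + dy * dy <= max_offset * max_offset

# Example: a 5x5 black frame with one red pixel at the center.
frame = [[(0, 0, 0) for _ in range(5)] for _ in range(5)]
frame[2][2] = (255, 0, 0)
target = track_color(frame, lower=(200, 0, 0), upper=(255, 60, 60))
fire = should_fire(target, aim_point=(2, 2), max_offset=1)
```

In practice the color range is usually easier to tune in HSV than in RGB, since hue is less sensitive to lighting changes.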

Published:

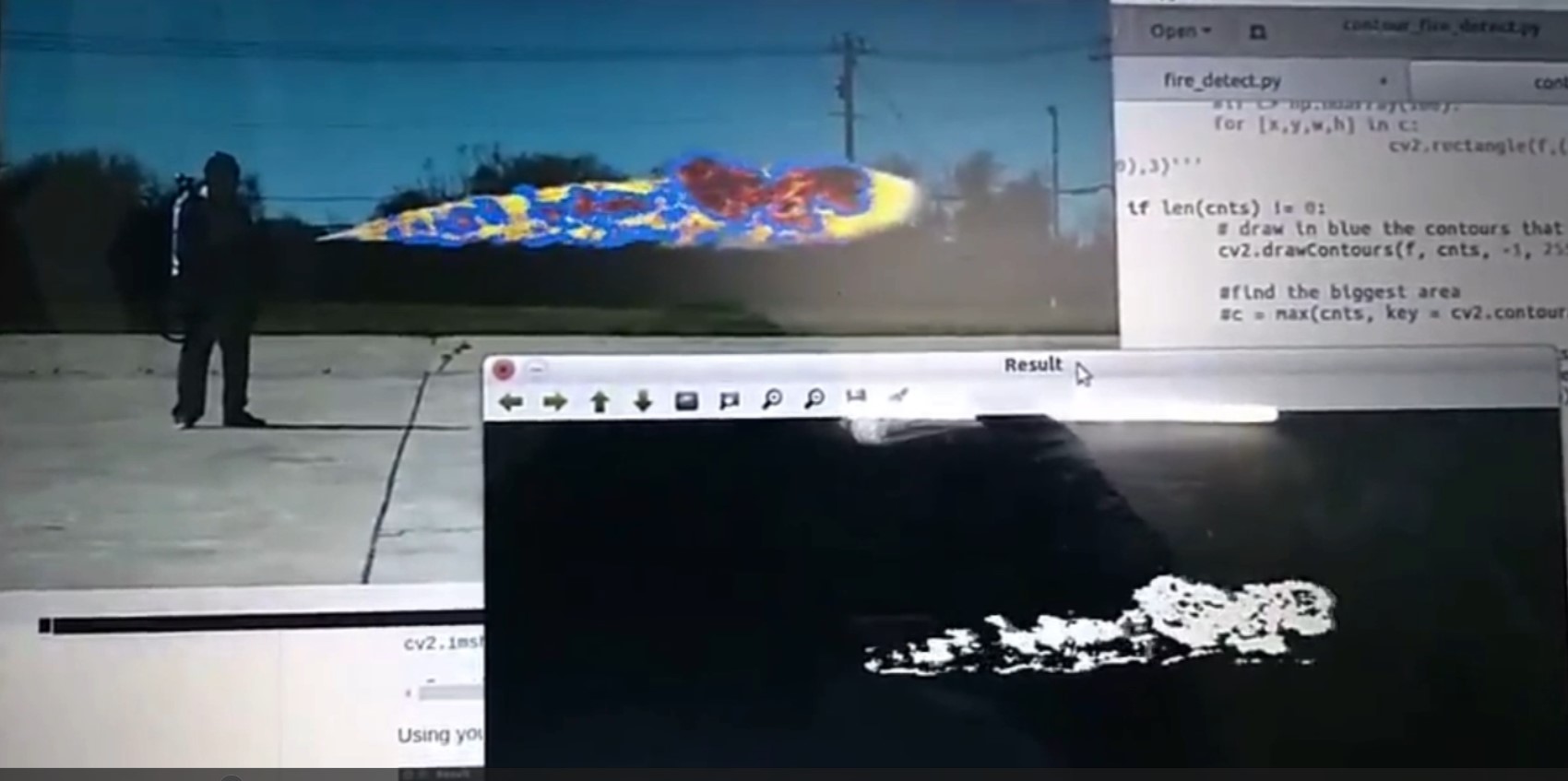

The aim of the project was to develop a computer vision algorithm to detect fire in a video feed. It could be used in public places with surveillance cameras to monitor for fire outbreaks and send notifications when one is detected.